How To Use Gestures To Control Apple Vision Pro

How to use hand gestures to control Apple Vision Pro

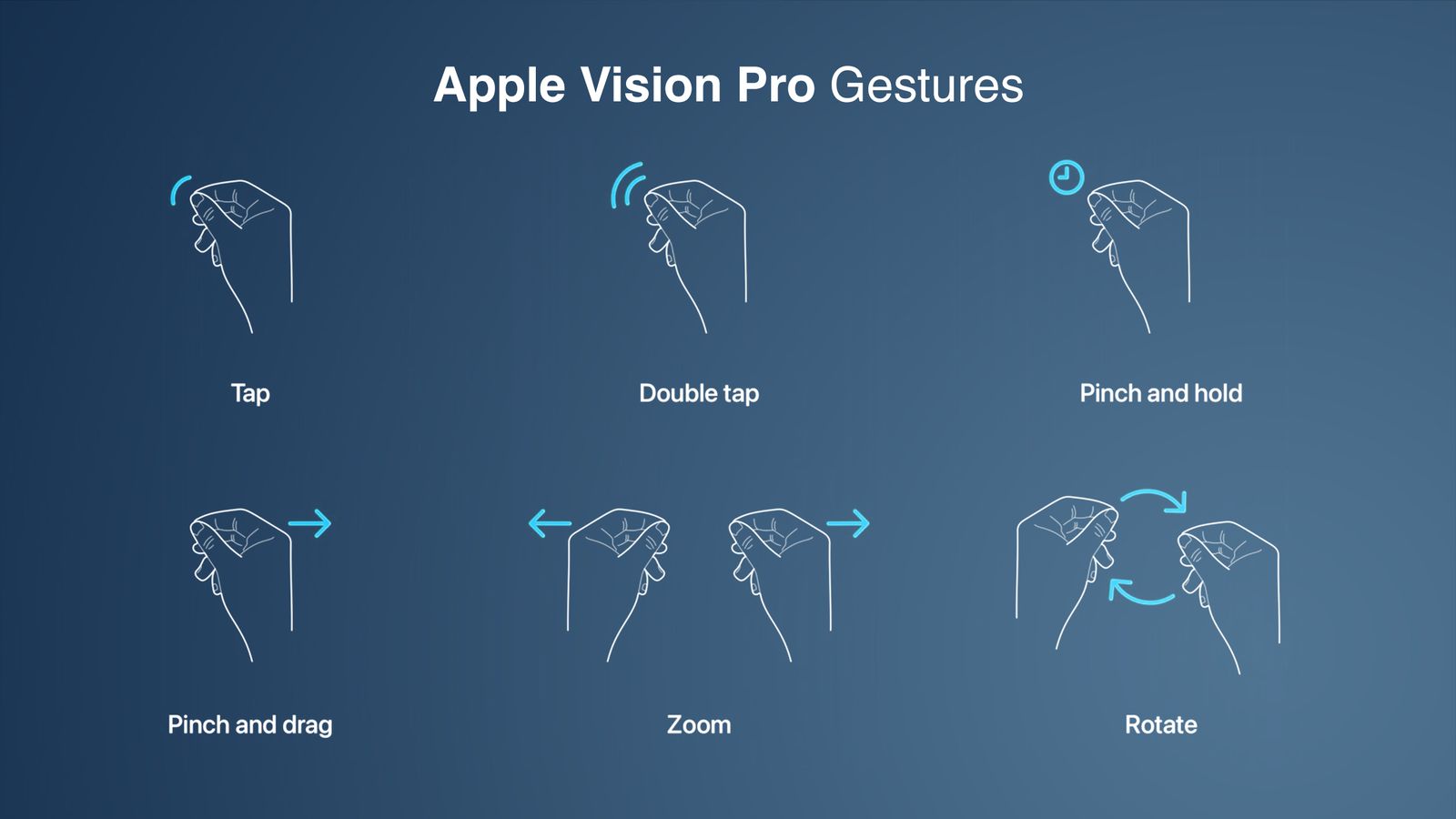

Apple has provided six hand gestures that let you control the Vision Pro software easily. Let’s check them out here:

Important Apple Vision Pro gesture details

Beyond the six hand gestures for controlling the Vision Pro headset, there are a few finer details about how the device works that are worth understanding.

Role of eye movements

Hand gestures work together with your eye movements, which are tracked by the cameras built into Apple Vision Pro.

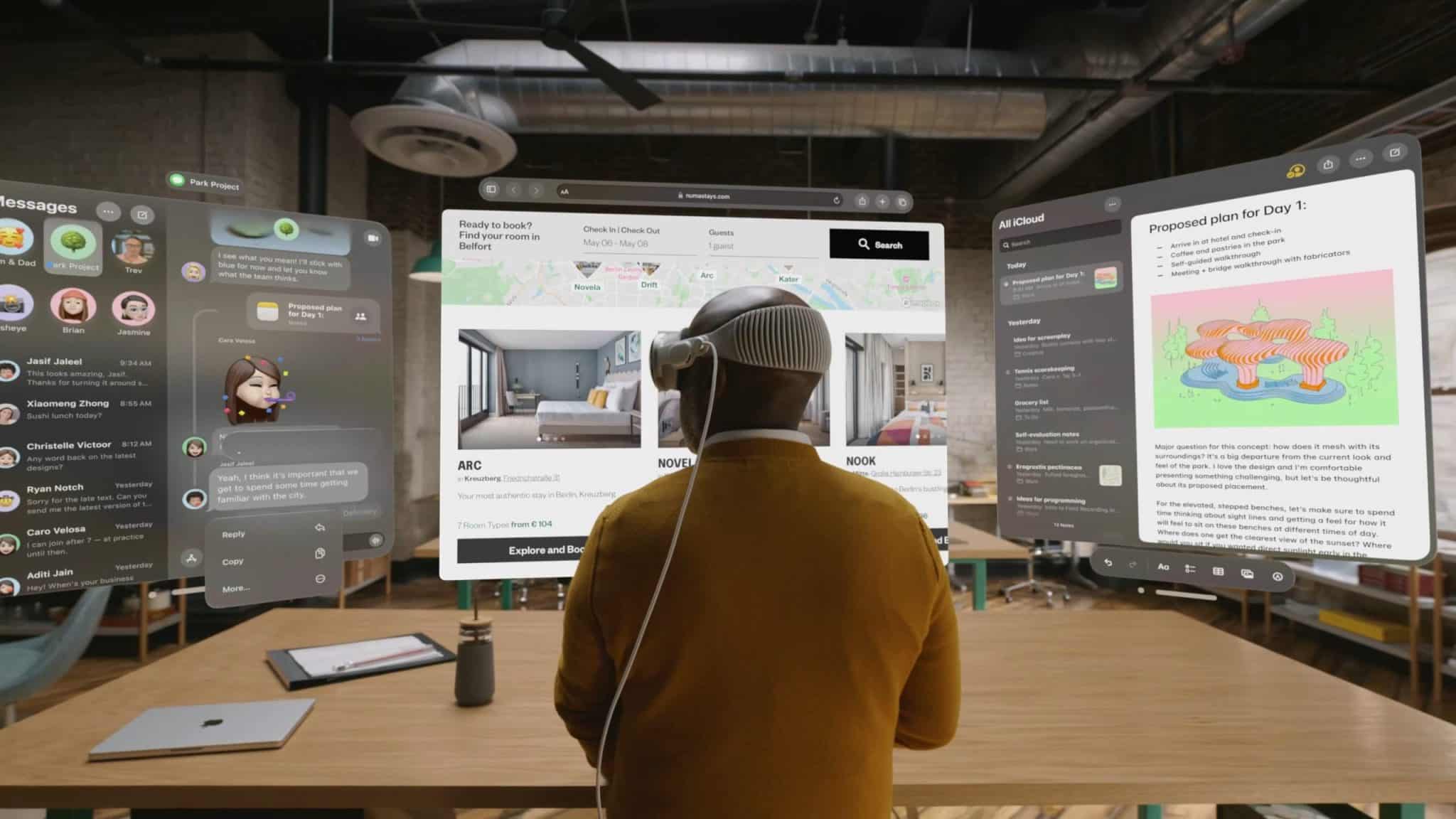

Per Apple’s announcement, Vision Pro has 12 cameras and five sensors that track hand gestures and eye movements and map the surrounding environment in 3D in real time. Two high-resolution cameras transmit over a billion pixels per second to the displays to give you a clear view of the world around you, while the other cameras handle hand tracking, 3D mapping, and head tracking. Your eyes do the pointing: where you look determines which object or control your hand gestures will act on. For instance, looking at a particular object on the virtual screen will target and highlight it. You can then use hand gestures to act on it.
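To make the "look, then gesture" idea concrete, here is a small toy model in Python. It is only a sketch for illustration, not Apple's API or how visionOS is implemented: the `Target` class, the `gaze_target` and `handle_input` functions, and the coordinates are all made up for this example. It assumes each item on screen is a circle, the gaze is a single point, and a pinch is a simple yes/no signal.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Target:
    """A selectable item on the virtual screen (hypothetical model)."""
    name: str
    x: float
    y: float
    radius: float


def gaze_target(targets: List[Target], gaze_x: float, gaze_y: float) -> Optional[Target]:
    """Return the closest target whose circle contains the gaze point, if any."""
    best = None
    best_dist = None
    for t in targets:
        dist = ((t.x - gaze_x) ** 2 + (t.y - gaze_y) ** 2) ** 0.5
        if dist <= t.radius and (best_dist is None or dist < best_dist):
            best, best_dist = t, dist
    return best


def handle_input(targets: List[Target], gaze_x: float, gaze_y: float, pinched: bool) -> str:
    """The eyes pick the target; the pinch gesture acts on it."""
    t = gaze_target(targets, gaze_x, gaze_y)
    if t is None:
        return "nothing highlighted"
    return f"selected {t.name}" if pinched else f"highlighted {t.name}"


# Two made-up app icons placed side by side.
icons = [Target("Safari", 0.0, 0.0, 1.0), Target("Photos", 3.0, 0.0, 1.0)]
print(handle_input(icons, 0.2, 0.1, pinched=False))
print(handle_input(icons, 2.8, 0.3, pinched=True))
```

In this model, looking at an icon only highlights it, and nothing is selected until a pinch arrives while the gaze is still on that icon. That mirrors the division of labor described above: the eyes aim, the hands confirm.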

Hand positions

You don’t need to hold your hands in midair while operating Vision Pro. Feel free to rest them in your lap so they don’t tire. In fact, Apple encourages people to avoid grand gestures that could strain their hand muscles. The camera and sensor setup on the headset can precisely track even your slightest motions.

With Vision Pro, you can select and manipulate objects both close to you and far away. That said, Apple expects that people may want to use larger gestures for objects right in front of them. You can also reach out and use your fingers directly on a virtual object. For instance, to scroll through a Safari window, you can reach your hand out and scroll it directly rather than use the standard hand gestures. Vision Pro is also expected to support hand movements like typing in the air.

Everything works together to make the virtual space feel realistic. Suppose you are drawing a cat virtually. You look at the point on the display where you want to draw, pick a tool with your hand, and draw using hand gestures. Your eye movements move the cursor around.

Room for creativity

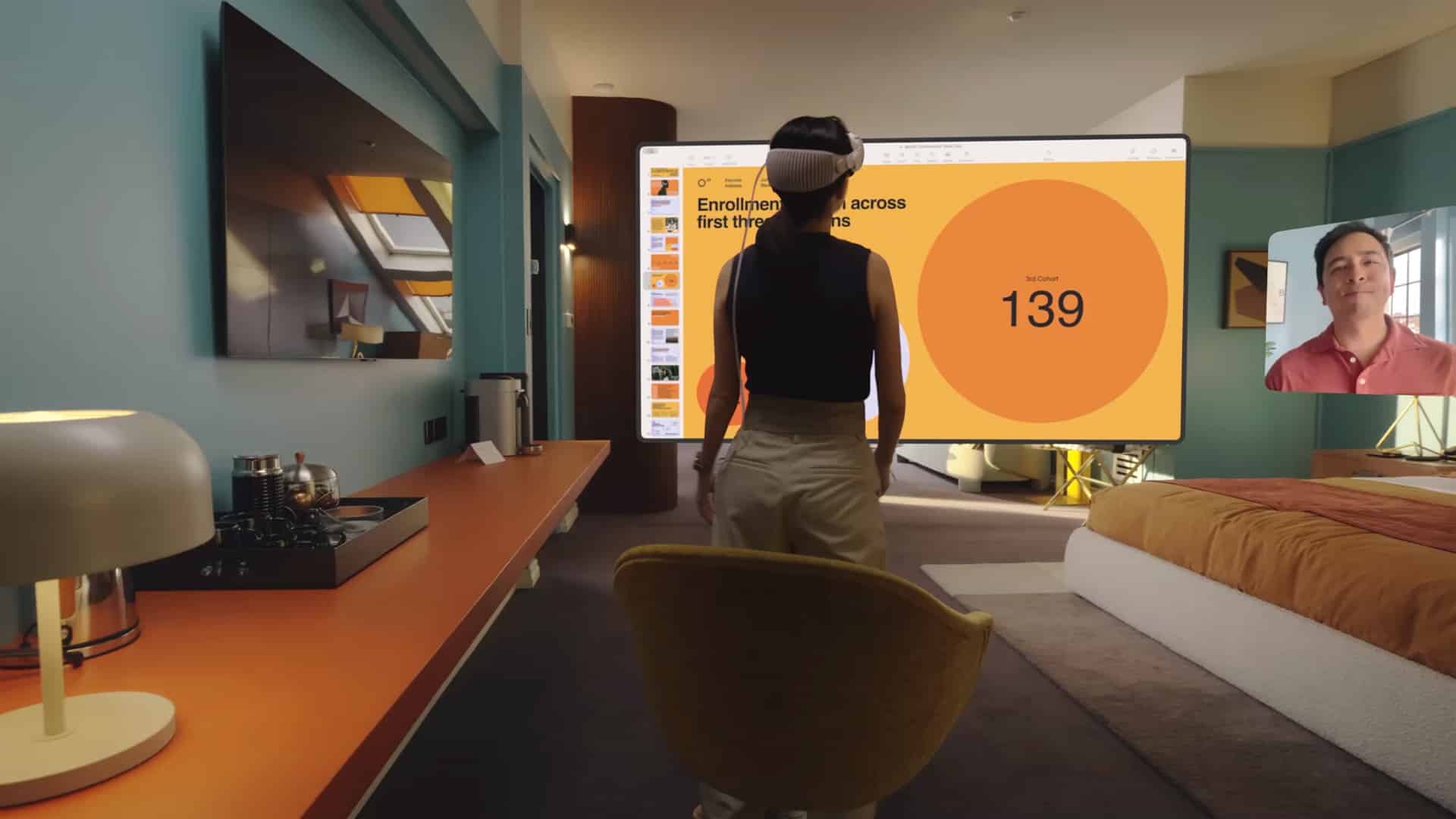

Apart from the six gestures above, developers can create custom gestures for their apps. However, developers must ensure that their custom gestures are distinct from the system ones and do not strain users’ hands. Apple Vision Pro will also support Bluetooth keyboards, trackpads, mice, and game controllers. In addition, the device has voice-based search and dictation tools.

Developers who used the Vision Pro firsthand have given a big ‘thumbs-up’ to Apple’s creativity. The headset strives to incorporate fluid touch controls using fingers, as on iPhones and iPads. It’s intuitive, intelligent, and immersive like other Apple products, and then some.

The product is slated for release on February 2, 2024. So, let’s wait for it. Once you get to experience your Vision Pro, let us know how much you like the control gestures!
